ahrefs vs SEMrush for Shopify stores: which SEO tool is better?

ahrefs vs SEMrush again? Yet another X vs Y SEO tool comparison?

Well, kind of.

I’m not going to get into the nitty-gritty of each tool, what they do, or which one I like better and why, mostly because I use both and each has its own strengths and weaknesses.

What I am going to share is one huge difference between ahrefs and SEMrush for anyone who needs to use one of these (or other tools) with a Shopify store and only wants to (or can afford to) use one or the other. If I had to guess, that describes quite a few customers of Shopify’s self-serve e-commerce hosting platform.

So what’s the difference?

Let’s get right to it, shall we? The problem is Shopify’s aggressive implementation of crawl-delay throttling & IP rate limiting – or more specifically how ahrefs and SEMrush handle it.

If you know what that means, feel free to skip ahead to how these tools handle it. If you don’t, read on for a quick primer.

What is crawl throttling & IP rate limiting?

Here’s the short version:

When a bot (such as Googlebot or the crawlers used by SEO tools) crawls a website, it can use up a significant amount of server resources, sometimes enough to noticeably degrade server performance.

To combat the negative effects of this, which could include making your site so slow nobody else can access it, hosting & SaaS providers employ methods to limit the bot crawl rate.

One such method is the Crawl-delay directive in your robots.txt file. Well-behaved bots will respect it and not crawl faster than you want them to. Not all bots do, though, and as an SEO you may already know that’s sometimes a good thing: sometimes you want to be able to ignore robots.txt directives.
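To make the directive concrete, here’s a minimal Python sketch of how a well-behaved crawler might look up the Crawl-delay that applies to it, using the standard library’s `urllib.robotparser`. The robots.txt text is an illustrative excerpt (an assumption, not a full copy of any real Shopify file), and `crawl_delay_for` is a hypothetical helper name.

```python
from urllib.robotparser import RobotFileParser

# Illustrative robots.txt excerpt (assumed, not a complete real file).
# The User-agent and Crawl-Delay lines must be adjacent: a blank line
# between them would end the record before the delay is attached.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin

User-agent: MJ12bot
Crawl-Delay: 10
"""


def crawl_delay_for(robots_txt: str, user_agent: str):
    """Return the Crawl-delay (in seconds) that applies to user_agent, or None."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.crawl_delay(user_agent)


if __name__ == "__main__":
    for agent in ("MJ12bot", "SemrushBot"):
        print(agent, crawl_delay_for(ROBOTS_TXT, agent))
```

Only MJ12bot gets a delay here; any other bot falls through to the `*` record, which sets none, so it’s left to decide its own pace.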

So if a bot ignores these directives, or the robots.txt isn’t set up correctly, it can still overload the server. Enter option number 2.

Another method is to monitor the rate of requests coming from each IP address and temporarily block an IP when its requests threaten to overload the server. In basic terms, this is what Shopify is doing.

Simply put, Shopify enforces an IP-based limit on the number of requests allowed within a set time window and blocks clients that exceed it.
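Here’s a minimal Python sketch of that idea: a sliding-window limiter keyed by IP address. It only illustrates the general technique; Shopify’s actual algorithm and thresholds aren’t known here, so `max_requests` and `window_s` are placeholder values and the class name is hypothetical.

```python
from collections import defaultdict, deque


class SlidingWindowLimiter:
    """Hypothetical per-IP limiter: at most max_requests per window_s seconds.

    The thresholds are placeholders; this does not model Shopify's real limits.
    """

    def __init__(self, max_requests: int, window_s: float):
        self.max_requests = max_requests
        self.window_s = window_s
        # ip -> timestamps of requests that were allowed through
        self._hits = defaultdict(deque)

    def allow(self, ip: str, now: float) -> bool:
        hits = self._hits[ip]
        # Forget requests that have slid out of the time window.
        while hits and now - hits[0] >= self.window_s:
            hits.popleft()
        if len(hits) >= self.max_requests:
            return False  # a server would answer with HTTP 429 or similar
        hits.append(now)
        return True


if __name__ == "__main__":
    limiter = SlidingWindowLimiter(max_requests=2, window_s=10)
    for t in (0, 1, 2, 10):
        print(t, limiter.allow("203.0.113.7", t))
```

In this sketch only allowed requests are recorded, so a bot that keeps hammering gets through again as soon as old requests age out; a stricter limiter might also count rejected requests or impose a longer lockout.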

This is not necessarily a bad thing. It can help thwart attacks and scraping, to name a couple of examples. But it can be really annoying when you’re using an SEO tool like ahrefs or SEMrush, among others.

How do ahrefs & SEMrush handle crawl-delay & IP rate limiting?

Both of these SEO tools give you some control over crawl speed, but they approach it in different ways. Let’s not confuse crawl speed with the Crawl-delay directive, though.

The directive is your request for a given bot to limit its crawl speed. It’s up to the bot whether to respect that request. Sometimes this works about as well as asking that one guy who always rips past your house in his ’89 Camaro to slow down: he won’t, no matter how kindly or how often you ask.

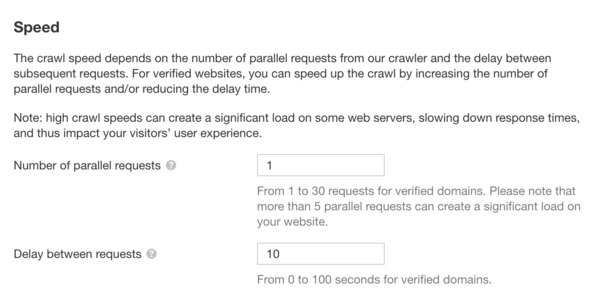

How ahrefs handles crawl speed

ahrefs lets you control crawl speed quite granularly for verified domains, because it assumes you control the server: if you don’t mind the performance hit, that’s your choice, since it’s your site.

You can set the number of parallel (simultaneous) requests and the delay between subsequent requests for each parallel thread. So if you set it to 30 parallel requests with a 0-second delay, you are emulating 30 people with high-speed internet connections mashing the refresh button over and over and over.
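A rough way to see how those two settings combine: each thread waits for a response and then pauses for the delay, so the request rate is about the number of threads divided by (response time + delay). This minimal Python sketch assumes a 0.5-second average response time, which is an illustrative guess rather than a measurement, and the function name is hypothetical.

```python
def requests_per_second(parallel: int, delay_s: float, response_s: float) -> float:
    """Approximate steady-state request rate for `parallel` crawler threads.

    Each thread waits for a response (response_s), then pauses (delay_s)
    before its next request, so one cycle takes response_s + delay_s.
    """
    cycle = response_s + delay_s
    if cycle <= 0:
        raise ValueError("response_s + delay_s must be positive")
    return parallel / cycle


if __name__ == "__main__":
    # response_s = 0.5 is an assumed average response time.
    for parallel, delay in [(30, 0), (1, 10)]:
        rps = requests_per_second(parallel, delay, response_s=0.5)
        print(f"{parallel} parallel, {delay}s delay: {rps:.2f} req/s, {rps * 60:.0f} req/min")
```

Under that assumption, 30 threads with no delay work out to about 60 requests per second, while 1 thread with a 10-second delay is under 6 requests per minute.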

Yeah, I could see that being a problem. Fortunately, you can control it with the Crawl-delay directive on your server and via the crawl settings in ahrefs:

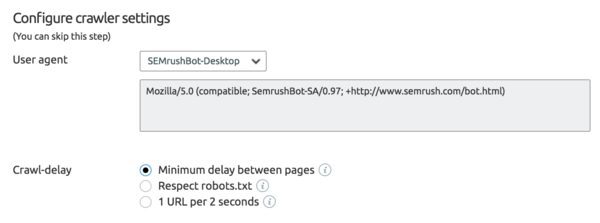

How SEMrush handles crawl speed

SEMrush takes a different, less granular approach, offering three crawl-speed options to choose from. As you’ll see a bit further on, this can cause a number of problems, including SEMrush being unable to crawl your Shopify site completely.

The problem with this method, specifically for Shopify, is that even 1 URL per 2 seconds (the slowest setting you can choose manually) is too fast: SEMrush’s bot gets IP-blocked and the crawl fails on my site, which has all of 4 products plus a few pages and blog posts. That makes it a non-starter for a larger client site.

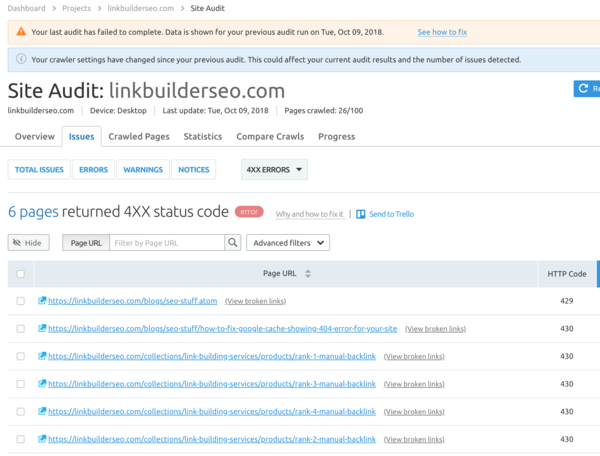

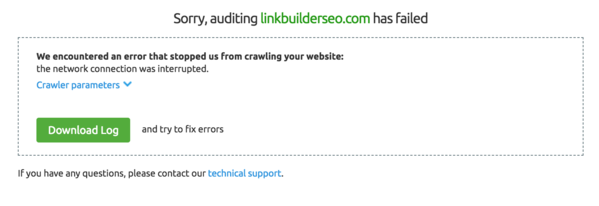

See what happens when SEMrush tries to crawl Shopify

Sometimes SEMrush will start a crawl and report a high percentage of HTTP 429 and/or 430 status codes (429 is the standard “Too Many Requests” response; 430 is a non-standard code Shopify returns).

Other times the crawl fails completely.
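For contrast with a fixed speed setting, here’s a minimal Python sketch of what a crawler could do when it sees these responses: back off and retry more slowly instead of pressing on. This is not how SEMrush or ahrefs actually works. The `fetch` callable, the backoff constants, and the possibility that the server sends a `Retry-After` header are all assumptions for illustration.

```python
import time
from typing import Callable, Mapping, Optional, Tuple

# Hypothetical fetch function: takes a URL, returns (status, headers).
Fetch = Callable[[str], Tuple[int, Mapping[str, str]]]

# 429 is standard "Too Many Requests"; 430 is non-standard (Shopify).
THROTTLE_STATUSES = {429, 430}


def backoff_seconds(attempt: int, headers: Mapping[str, str],
                    base_s: float = 10.0, cap_s: float = 300.0) -> float:
    """Delay before retrying: use a numeric Retry-After if present,
    otherwise exponential backoff capped at cap_s.

    Only the delay-in-seconds form of Retry-After is handled, and the
    header name is assumed to use this exact capitalization.
    """
    retry_after = headers.get("Retry-After")
    if retry_after is not None and retry_after.strip().isdigit():
        return float(retry_after.strip())
    return min(cap_s, base_s * (2 ** attempt))


def polite_get(url: str, fetch: Fetch, max_attempts: int = 5,
               sleep: Callable[[float], None] = time.sleep) -> Optional[int]:
    """Fetch url, backing off on throttling responses.

    Returns the final non-throttled status, or None if still throttled
    after max_attempts tries.
    """
    for attempt in range(max_attempts):
        status, headers = fetch(url)
        if status not in THROTTLE_STATUSES:
            return status
        if attempt < max_attempts - 1:  # no point sleeping after the last try
            sleep(backoff_seconds(attempt, headers))
    return None
```

The `sleep` parameter is injectable so the backoff can be checked without actually waiting; a real crawler would pass a function that performs an HTTP request as `fetch`.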

Shopify is not being flexible, but SEMrush is ultimately at fault

After encountering these problems and finding out what was causing the error, I figured I would do some further reading about it and contact both Shopify and SEMrush support.

News flash: neither seems to care. I’m small fry, I know. But I’m not the only one. As it turns out, this is a pretty common problem, and neither Shopify nor SEMrush seems to want to resolve it.

What Shopify support suggests as a fix

Shopify’s support rep basically said that the tool needs to be throttled and pointed me to a support thread where someone else is using SEOprofiler successfully after changing their crawl speed settings:

This isn’t something that we’re able to change on Shopify’s end, so you’ll want to reach out to the SEMrush support team to see if there’s a way to further slow down these requests. The merchant in the forum post had success with one request per 30 seconds.

Reading between the lines: “use a different tool or contact SEMrush.”

Interestingly, Shopify have implemented a Crawl-delay setting for Majestic’s MJ12bot, which gathers data for Majestic’s link database SEO tool.

From my own robots.txt, which Shopify generates automatically and which users can’t change or add to in any way:

User-agent: MJ12bot
Crawl-Delay: 10

I suggested that since they’re clearly giving this directive to one SEO tool, perhaps it wouldn’t be that much more work to add the handful of other larger ones.

Or they could let merchants append their own Crawl-delay directives to the file via the theme code editor.

I’m not holding my breath. It doesn’t seem like they care, which is concerning given how big a focus SEO is for most e-commerce sites.

What SEMrush support suggests as a fix

Unfortunately, SEMrush had no suggestions for how to fix this. From their support:

This happens because Shopify has restrictions set against our bot that interfere with the crawling process; Shopify websites could start returning 429 and 430 HTTP errors to our bot during the audits, making part of website’s pages uncrawlable.

Shopify have stated that our bot requests pages too fast, so this was a security precaution in order to minimize server load. In turn, SEMrush has implemented custom crawl delay setting for the bot in order to limit crawl speed – but Shopify still has their block in place.

I advise to specify custom crawl delay setting in your Site Audit campaign to 1 URL per 2 seconds – but I see that you’ve already done that – this should help a bit, but until Shopify reviews their policy in regards to our bot, audits of websites that are hosted by them will be inconsistent in terms of number of successfully crawled pages.

Sure, I get that they can’t control Shopify. But they can, of course, control their own bot and tool features, and it shouldn’t be a huge programming change to add a slower crawl option that Shopify is OK with.

My ahrefs crawls, set to 1 parallel request every 10 seconds (admittedly slow, which can be a problem for larger sites), seem to work flawlessly, with none of the same errors.

Where do you think I got that value from? The Shopify robots.txt Crawl-delay directive for Majestic.
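To put “slow” in numbers, here’s a rough Python estimate of how long a crawl takes at a given per-URL delay. The 10,000-URL site is a hypothetical example, the function name is illustrative, and response time is ignored unless you pass it in, so real crawls will take somewhat longer.

```python
from datetime import timedelta


def crawl_duration(num_urls: int, parallel: int, delay_s: float,
                   response_s: float = 0.0) -> timedelta:
    """Rough time to crawl num_urls when each thread spends
    response_s + delay_s per URL and URLs are split evenly across threads."""
    if parallel < 1:
        raise ValueError("parallel must be at least 1")
    per_thread = -(-num_urls // parallel)  # ceiling division: URLs on the busiest thread
    return timedelta(seconds=per_thread * (response_s + delay_s))


if __name__ == "__main__":
    # Hypothetical 10,000-URL store crawled by 1 thread with a 10-second delay.
    print(crawl_duration(10_000, parallel=1, delay_s=10))
```

That works out to 100,000 seconds, or roughly 28 hours, for the hypothetical site. For comparison, SEMrush’s slowest setting of 1 URL per 2 seconds would need about 5.6 hours for the same site, if Shopify let it through.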

Verdict: ahrefs wins for Shopify stores

The short version: if you have a Shopify store and only want to pay for one tool, use ahrefs, because SEMrush will cause more headaches, if it works at all.

Here’s why I fault SEMrush for this more than Shopify

Shopify’s core business is to provide a reliable, well-performing e-commerce platform to their clients. While they should prioritize compatibility with SEO tools, which are part of any serious site owner’s or SEO’s core toolkit, their main priority is to offer a reliable service that can’t easily be taken down by a bunch of bots.

SEMrush, on the other hand, is purely an SEO tool, and the ability to crawl any site is of paramount importance to an SEO. Their core product is built on site data crawled from various sources, so their focus should be on providing that information while making sure their crawler isn’t easily blocked and doesn’t cause performance issues, inadvertently or not.

Additionally, SEO (at least to me) is partly about looking for, finding and testing out things that may give you an advantage or further your knowledge. Sometimes that means looking in corners others might not want you to look into: spoofing your User-agent or IP, or using a VPN, to see how sites respond when they think you’re somebody else, such as whether a site serves keyword-stuffed content when it thinks you are Googlebot. With that in mind, I feel strongly that responsibility for whether a site gets crawled falls largely on the service provider offering the hosted crawler.

Sorry, SEMrush. I like your product quite a bit, but this is a big fail in my book.

Why I chose Shopify anyway

I have hands-on experience with various other platforms and homegrown apps, but hadn’t had much with Shopify. I created this site to get that hands-on experience with the platform and because I wanted a simple way to handle orders.

With all that, plus the fact that I have a client who uses Shopify, I wanted to get my hands dirty.

I didn’t research many alternatives and just jumped right in. I figured it would be a learning experience at a minimum, which it has been in many ways in just this short time.

Unfortunately not all I have learned is positive. I’ll continue to update my blog as I continue working with Shopify for those of you interested in following along.

Comment (1)

Hi Mika, great post, thanks. I received a lot of 430 errors when I tried to crawl a Shopify site using Screaming Frog. It is sad that on the Shopify platform there is no way to change all the files. I think WordPress (WooCommerce) is much better than Shopify and similar platforms.

Have a good one Mika.
